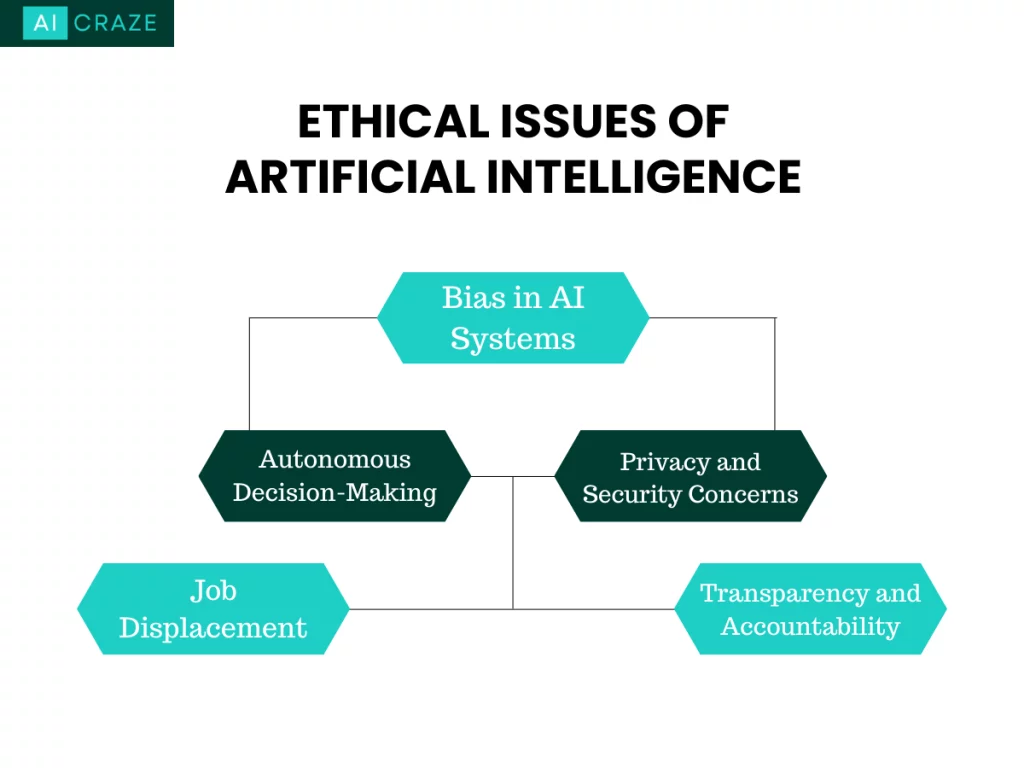

Artificial Intelligence (AI) has transformed the world we live in. From automating mundane tasks to supporting critical decisions, AI has reshaped industries and made many everyday tasks easier. However, the power AI brings also raises ethical issues that need to be addressed. In this article, we explore the main ethical issues of artificial intelligence and why they matter in the modern world.

Introduction

AI is the ability of machines to perform tasks that typically require human intelligence, such as recognizing speech, making decisions, and solving problems. The development of AI has brought about several benefits, including increased efficiency, productivity, and accuracy. However, the use of AI also poses significant ethical challenges that need to be addressed. This article will provide an overview of some of the most pressing ethical issues of artificial intelligence.

Bias in AI Systems

Bias is one of the most significant ethical issues of AI, and it has drawn increasing attention in recent years. AI systems are only as good as the data they are trained on: if that data is biased, the systems trained on it will be biased too.

Bias can enter AI systems in several ways. The first is through the training data. For example, if an AI system is trained on data that contains predominantly male names, it may learn to treat female names as unusual or less relevant. This could lead to discriminatory outcomes, such as excluding qualified female candidates from job interviews.

Bias can also enter through the algorithms that turn data into decisions. An algorithm is the set of rules or mathematical procedures an AI system follows to reach a decision from data, and design choices, such as which factors it weighs most heavily, can build bias into its results. For example, an algorithm used to screen job applicants may give preferential treatment to candidates with certain educational backgrounds, leading to discriminatory outcomes.

The consequences of bias are most serious in areas such as employment, criminal justice, and healthcare. In employment, an AI system used to screen job applicants may discriminate against qualified candidates based on their race, gender, or age, producing unjust outcomes and perpetuating existing social and economic inequalities.
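To make the screening example more concrete, the short Python sketch below shows one common way to check an automated screen for unequal outcomes: compute the selection rate for each group and take the ratio of the lowest rate to the highest. The group labels and counts are hypothetical, and the 0.8 threshold reflects the "four-fifths" rule of thumb from U.S. employment-selection guidance. It is a screening heuristic that signals when to look closer, not proof of discrimination or a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of applicants selected in each group.

    `decisions` is a list of (group, selected) pairs, where `selected` is a bool.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical screening outcomes: (group label, passed the automated screen?)
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'group_a': 0.6, 'group_b': 0.3}
print(round(ratio, 2))  # 0.5

# Under the four-fifths heuristic, a ratio below 0.8 is a signal to investigate.
print("flag for review" if ratio < 0.8 else "within heuristic threshold")
```

A check like this only looks at outcomes. It cannot say *why* the rates differ, which is why it is usually paired with a review of the training data and the factors the model relies on.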

In criminal justice, an AI system used to predict recidivism may be biased against individuals from certain racial or socioeconomic groups, unfairly influencing decisions such as bail or sentencing.

Finally, bias in healthcare AI can directly harm patients. For example, a system used to diagnose diseases may be less accurate for populations that are underrepresented in its training data, leading to misdiagnoses and inappropriate treatments. Addressing bias in AI systems requires a collaborative effort from governments, industry, academia, and civil society.

Autonomous Decision-Making

Autonomous decision-making describes the ability of artificial intelligence (AI) systems to make decisions without human intervention. These decisions range from relatively simple ones, such as selecting the best route for a self-driving car, to complex ones, such as diagnosing medical conditions or making investment decisions.

Autonomous decision-making has the potential to transform many industries and improve efficiency, but it also raises significant ethical concerns, which is why it belongs among the ethical issues of artificial intelligence. One of the main concerns is the lack of human oversight and accountability. If an AI system makes a decision that has negative consequences, who is responsible? This raises difficult questions about liability and accountability.

Autonomous decision-making systems also raise concerns about bias and unfairness. Such systems are only as good as the data that informs them. If the data used to train the AI model is skewed toward a certain perspective or reflects past discrimination, the system will be biased in the same way. This could lead to discriminatory outcomes, such as excluding certain groups from job interviews or denying loans based on factors such as race or gender.

Privacy is another concern with autonomous decision-making. Because AI systems collect and analyze large volumes of data, they create a risk that personal data will be misused or exposed. This could have serious consequences for individuals, particularly when sensitive information such as medical records or financial data is involved. Addressing this ethical issue requires a collaborative effort from governments, industry, academia, and civil society.

Privacy and Security Concerns

As artificial intelligence (AI) systems become ubiquitous, concerns about privacy and security are growing more pressing. AI systems often rely on vast amounts of data to make decisions, and this data can contain sensitive personal information. As a result, they carry significant risks of data breaches, hacking, and other cyberattacks. This makes privacy and security one of the most important ethical issues of artificial intelligence.

One of the primary privacy concerns is that personal data may be compromised. When AI systems collect and analyze large amounts of data, that data can be accessed by unauthorized people or groups, leading to harms such as identity theft or fraud. There is also concern that AI systems could be used for surveillance, tracking people by analyzing large volumes of data such as video footage.

While such surveillance can be useful for law enforcement and national security, it also raises significant privacy concerns. Addressing this ethical issue requires a collaborative effort from governments and civil society.

Job Displacement

The rise of artificial intelligence (AI) is bringing significant changes to the job market. AI can create new job opportunities and increase productivity, but it can also displace workers in particular industries. After privacy and security, this makes job displacement one of the most important ethical issues of artificial intelligence.

One of the primary drivers of job displacement is automation. As AI systems improve, they can take over tasks that people used to perform, which can cost workers their jobs in areas such as manufacturing, transportation, and customer service.

Beyond routine automation, AI systems can also perform more complex tasks, such as data analysis and decision-making. This could affect jobs in fields such as finance, law, and medicine, where human expertise has traditionally been highly valued.

The impact of job displacement will vary by industry and by the type of work being performed. Some workers may be able to move into new roles created as a result of AI, while others may need to develop new skills or seek training to remain competitive in the job market. Addressing this ethical issue requires a collaborative effort from governments, industry, academia, and civil society.

Transparency and Accountability

Finally, transparency and accountability are also ethical issues of artificial intelligence. As AI becomes more ubiquitous in our daily lives, there is growing concern about how opaque these systems can be in their design and implementation. Traditional software follows explicit rules written by programmers and engineers, whereas AI systems often rely on complex models learned from data, which are difficult to understand and interpret.

One of the primary concerns around transparency in AI is the lack of visibility into how these systems make decisions. Because AI systems are often developed using machine learning algorithms, they are capable of learning from vast amounts of data and making predictions or decisions based on this data. However, the exact processes and inputs that lead to these decisions may not always be clear.

This lack of visibility is a serious problem, especially in areas such as healthcare and finance, where decisions made by AI can directly affect people’s lives. Without transparency and accountability, it is difficult to ensure that these decisions are fair, unbiased, and made in the best interests of the individuals or organizations involved. Addressing this ethical issue requires a collaborative effort from governments, industry, academia, and civil society.
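One way to reduce this opacity, where a simpler model is accurate enough, is to use an inherently interpretable model. The Python sketch below assumes a hypothetical linear credit-scoring model; its feature names, weights, and applicant data are invented purely for illustration. Because the score is a weighted sum, each input's contribution can be reported alongside the decision, which gives a person something concrete to review or contest.

```python
# Hypothetical weights for a transparent, linear credit-scoring model.
# These names and numbers are invented for illustration only.
WEIGHTS = {
    "income_thousands": 0.04,
    "years_employed": 0.3,
    "missed_payments": -1.2,
}
BIAS = -2.0  # hypothetical baseline score before any feature is considered


def score_with_explanation(applicant):
    """Return the model's score plus each feature's additive contribution."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions


# A hypothetical applicant.
applicant = {"income_thousands": 50, "years_employed": 4, "missed_payments": 2}
score, contributions = score_with_explanation(applicant)

# List the factors from most to least influential, so a reviewer can see
# exactly what pushed the score up or down.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>18}: {value:+.2f}")
print(f"{'total score':>18}: {score:+.2f}")
```

Complex models such as deep neural networks do not break down this neatly into per-input contributions, which is a large part of why explaining their decisions is so much harder.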

Conclusion

AI has the potential to bring tremendous benefits, but it also poses significant ethical challenges that need to be addressed. The ethical issues of AI, including bias, autonomous decision-making, privacy and security, job displacement, and transparency and accountability, are complex and multifaceted. Addressing them will require a collaborative effort from governments, industry, academia, and civil society. By working together, we can ensure that AI is used ethically and in a way that benefits society as a whole.